VFX is one of the most important parts of many kinds of productions, whether movies, TV shows, or commercials. Blender is a 3D package capable of professional work, but is it really good when it comes to VFX? To answer this question, we are going to break things down so anyone can follow along.

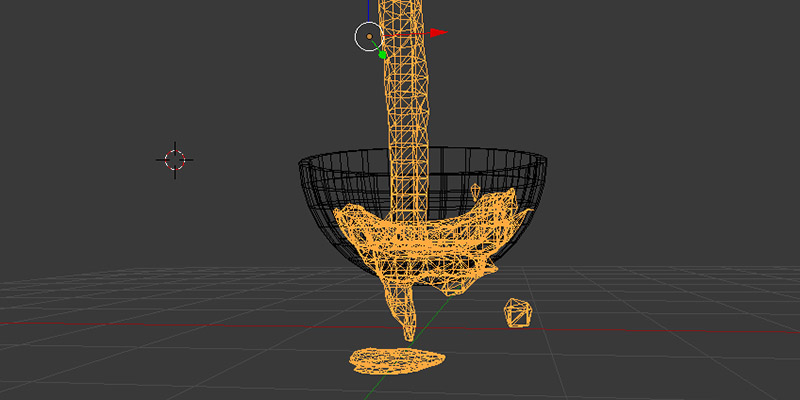

1_Particle system

Blender's particle systems are used to simulate large numbers of small moving objects, creating higher-order phenomena such as fire, dust, clouds, and smoke, or strand-based objects such as fur and grass.

Each particle can be a point of light or a mesh, and can be joined or dynamic. Particles can react to many different influences and forces, and each one has a lifespan.
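
To make the idea of forces and lifespans concrete, here is a minimal toy sketch in plain Python. It is not Blender's particle solver. `Particle`, `emit`, and `step` are hypothetical names, and gravity is the only force applied. Each step adds the forces to every particle's velocity, moves the particle, ages it, and removes it once its lifetime runs out.

```python
from dataclasses import dataclass

# Toy model for illustration only; not Blender's particle solver.
GRAVITY = (0.0, 0.0, -9.81)  # constant acceleration along -Z, in m/s^2 (assumed units)


@dataclass
class Particle:
    position: list
    velocity: list
    lifetime: float  # seconds before the particle is removed
    age: float = 0.0


def emit(count, lifetime, speed=5.0):
    """Emit `count` particles at the origin, all moving straight up at `speed`."""
    return [Particle([0.0, 0.0, 0.0], [0.0, 0.0, speed], lifetime) for _ in range(count)]


def step(particles, dt, accelerations=(GRAVITY,)):
    """Advance live particles one semi-implicit Euler step and drop any that have aged out."""
    alive = []
    for p in particles:
        for acc in accelerations:  # each influence changes the velocity first
            p.velocity = [v + a * dt for v, a in zip(p.velocity, acc)]
        p.position = [x + v * dt for x, v in zip(p.position, p.velocity)]
        p.age += dt
        if p.age < p.lifetime:  # the particle's lifespan
            alive.append(p)
    return alive
```

With `emit(100, lifetime=0.45)` and `dt=0.1`, all 100 particles survive four steps and none survive the fifth. That is the lifespan behaviour the paragraph above describes.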

Hair particles are a subset of regular particles. Hair systems form curves that can represent hair, fur, grass, and bristles. However, hair in Blender is not as advanced as what other software, or the add-ons used in the video game and VFX industries, can offer.

Blender's particle system can be used to create high-quality visual effects such as fire, smoke, dust, and blizzards. You can see this in the VFX for the show The Man in the High Castle, done by Barnstorm VFX, a studio that has integrated Blender into its pipeline.

To be fair, I believe that most software used in the VFX industry, other than Houdini, relies on plugins or add-ons on top of the package's built-in tools for many effects like fire, smoke, and fluids. Studios use powerful plugins such as FumeFX, Phoenix FD, Krakatoa, Thinking Particles, and so on.

Many of the best developers don't make add-ons for Blender because of its open-source nature. Under the GPL license, the source code for their tools would have to be made available, which lets others use it.

Nonetheless, Blender currently has a good particle system that can be used for VFX.

2_Rigid body simulation

The rigid body simulation can be used to simulate the motion of solid objects. It affects the position and orientation of objects and does not deform them.
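
The phrase "affects position and orientation but does not deform" can be shown with a small toy example. The sketch below is plain Python, not Blender or Bullet code. `rotate_z`, `apply_rigid_state`, and `pairwise_distances` are hypothetical helpers, and to keep it short the orientation is limited to a rotation about Z. It moves a unit cube's vertices by a rotation plus a translation and checks that the distance between every pair of vertices stays the same. That unchanged distance is exactly what separates rigid motion from deformation.

```python
import math
from itertools import combinations


def rotate_z(point, angle):
    """Rotate a 3D point about the Z axis by `angle` radians."""
    x, y, z = point
    c, s = math.cos(angle), math.sin(angle)
    return (c * x - s * y, s * x + c * y, z)


def apply_rigid_state(vertices, angle, location):
    """Apply a rigid body's orientation (here only a Z rotation), then its position."""
    lx, ly, lz = location
    moved = []
    for v in vertices:
        x, y, z = rotate_z(v, angle)
        moved.append((x + lx, y + ly, z + lz))
    return moved


def pairwise_distances(vertices):
    """Distance between every pair of vertices; a rigid motion leaves these unchanged."""
    return [math.dist(a, b) for a, b in combinations(vertices, 2)]


cube = [(x, y, z) for x in (0, 1) for y in (0, 1) for z in (0, 1)]
moved = apply_rigid_state(cube, math.radians(30), (2.0, -1.0, 0.5))
```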

Unlike the other simulations in Blender, the rigid body simulation works more closely with the animation system. This means that rigid bodies can be used like regular objects and be part of parent-child relationships, animation constraints, and drivers.

Blender's rigid body physics is great for flat surfaces or single-point-of-contact collisions, like a coin falling onto a surface or a cube bouncing.

However, rigid body physics struggles with curved surfaces in full contact with other curved surfaces.

Rigid Body Constraints let you simplify this by enforcing the relationships between objects directly, so you don't have to rely on the microscopic interactions of their surfaces. This generally produces a "good enough" simulation for your needs.

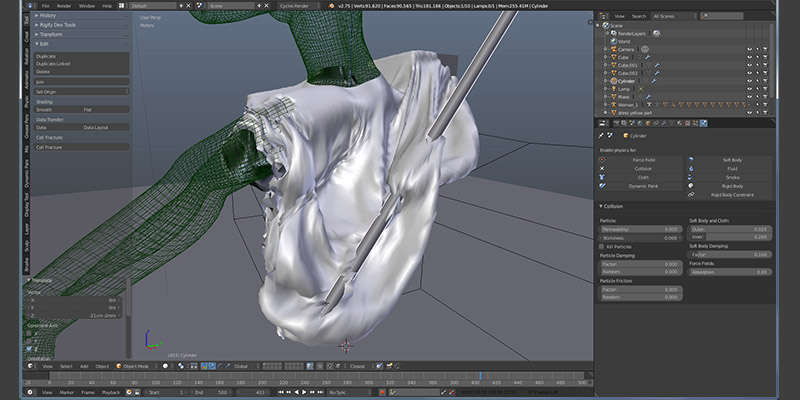

3_Soft body simulation

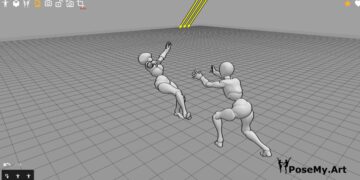

Soft body simulation is used for simulating soft deformable objects. It was designed primarily for adding secondary motion to animation, like jiggle for body parts of a moving character.

It also works for simulating more general soft objects that bend, deform and react to forces like gravity and wind, or collide with other objects.

The simulation works by combining existing animation on the object with forces acting on it. There are exterior forces like gravity or force fields and interior forces that hold the vertices together. This way you can simulate the shapes that an object would take on in reality if it had volume, was filled with something and was acted on by real forces.
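
Here is a minimal mass-spring sketch of the exterior and interior forces described above. It is a toy, not Blender's soft body solver, which has many more settings. `hanging_vertex_extension` is a hypothetical function, and all parameter values are assumed. A single vertex hangs from a fixed anchor by one damped spring. Gravity is the exterior force, and the spring is the interior force holding the vertex to the anchor. The damping term makes the jiggle die out.

```python
def hanging_vertex_extension(stiffness=50.0, mass=1.0, damping=2.0, rest_length=1.0,
                             gravity=9.81, dt=0.01, steps=2000):
    """Simulate one vertex hanging by a damped spring from a fixed anchor at z = 0.

    Returns how far the spring ends up stretched beyond its rest length.
    """
    z, vz = -rest_length, 0.0  # start at rest length below the anchor, not moving
    for _ in range(steps):
        length = -z  # distance from the anchor (the vertex is below it)
        interior = stiffness * (length - rest_length)  # spring pulls up (+z) when stretched
        exterior = -mass * gravity                      # gravity pulls down (-z)
        drag = -damping * vz                            # damping removes the jiggle over time
        vz += (interior + exterior + drag) / mass * dt  # semi-implicit Euler: velocity first
        z += vz * dt
    return -z - rest_length
```

After the motion settles, the spring force balances gravity, so the extension approaches `mass * gravity / stiffness` (about 0.196 with these defaults). A full soft body repeats this idea for every vertex, with springs along the mesh edges.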

Soft bodies can interact with other objects through Collision, and with themselves through Self-Collision.

4_Cloth simulation

Cloth simulation is one of the hardest aspects of CG because it is a deceptively simple real-world item that is taken for granted, yet actually has very complex internal and environmental interactions. After years of development, Blender has a very robust cloth simulator that is used to make clothing, flags, banners, and so on. Cloth interacts with and is affected by other moving objects, the wind, and other forces, as well as a general aerodynamic model, all of which is under your control.

In 2018, Rich Colburn created the Modeling Cloth add-on, an interactive cloth simulation engine. With it, you can click and drag, add wrinkles, shrink cloth around objects, mix soft body and cloth effects, and more. Overall, this add-on made creating cloth easier and faster, similar to what Marvelous Designer does.

Hopefully, with the recent update to the cloth simulation system, we will be able to simulate cloth faster and with better results using Blender.

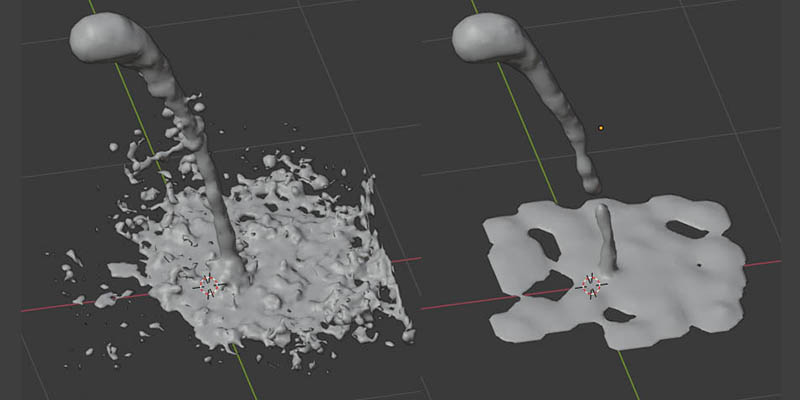

5_Fluid simulation

Fluid physics are used to simulate the physical properties of liquids, especially water. When creating a scene in Blender, you can mark certain objects to take part in the fluid simulation, for example as a fluid or as an obstacle. A fluid simulation needs a domain, which defines the space the simulation takes up. In the domain settings, you define the global simulation parameters (such as viscosity and gravity).
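
The setup described above can be sketched as a small data model: a domain box that carries the global parameters, plus scene objects tagged with a role. This is a hypothetical illustration, not Blender's API. `Domain`, `SceneObject`, `participants`, and the `"fluid"`/`"obstacle"` labels are made-up names, and the viscosity and gravity values and units are assumptions. It also shows that only objects inside the domain take part.

```python
from dataclasses import dataclass


@dataclass
class Domain:
    """Hypothetical fluid domain: an axis-aligned box plus global parameters."""
    min_corner: tuple
    max_corner: tuple
    viscosity: float = 1.0e-6           # kinematic viscosity, about water's, in m^2/s (assumed)
    gravity: tuple = (0.0, 0.0, -9.81)  # m/s^2 along -Z (assumed)

    def contains(self, point):
        return all(lo <= p <= hi for lo, p, hi in zip(self.min_corner, point, self.max_corner))


@dataclass
class SceneObject:
    name: str
    role: str  # hypothetical labels: "fluid" or "obstacle"
    center: tuple


def participants(domain, objects):
    """Group the objects that sit inside the domain by role; anything outside is ignored."""
    groups = {"fluid": [], "obstacle": []}
    for obj in objects:
        if obj.role not in groups:
            raise ValueError(f"unknown role: {obj.role!r}")
        if domain.contains(obj.center):
            groups[obj.role].append(obj.name)
    return groups
```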

Fluid simulations in Blender don't have the best reputation among 3D artists because the old system was not that great. With the new updates, though, things seem better than with the previous fluid simulation system. There are also new add-ons on the marketplace for this purpose that do a good job. That is a great thing, because it gives Blender users the freedom to use the tools that work best for them.

6_Motion tracking

Motion Tracking is used to track the motion of objects and/or a camera and, through the constraints, to apply this tracking data to 3D objects (or just one), which have either been created in Blender or imported into the application. Blender’s motion tracker supports a couple of very powerful tools for 2D tracking and 3D motion reconstruction, including camera tracking and object tracking, as well as some special features like the plane track for compositing. Tracks can also be used to move and deform masks for rotoscoping in the Mask Editor, which is available as a special mode in the Movie Clip Editor.
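
At its simplest, 2D tracking means following a small pattern from one frame to the next. The sketch below uses a generic brute-force technique, sum of squared differences (SSD) template matching. This is not Blender's tracker, which is considerably more sophisticated. `ssd` and `track` are hypothetical names, and frames are plain lists of grayscale values. The search looks for the pattern within a window around its previous position and picks the offset with the lowest SSD.

```python
def ssd(frame, patch, top, left):
    """Sum of squared differences between `patch` and the frame region starting at (top, left)."""
    total = 0
    for r, row in enumerate(patch):
        for c, value in enumerate(row):
            diff = frame[top + r][left + c] - value
            total += diff * diff
    return total


def track(frame, patch, prev_top, prev_left, radius):
    """Brute-force search within `radius` pixels of the previous position for the lowest SSD."""
    ph, pw = len(patch), len(patch[0])
    top_range = range(max(0, prev_top - radius), min(len(frame) - ph, prev_top + radius) + 1)
    left_range = range(max(0, prev_left - radius), min(len(frame[0]) - pw, prev_left + radius) + 1)
    best_score, best_pos = None, (prev_top, prev_left)
    for top in top_range:
        for left in left_range:
            score = ssd(frame, patch, top, left)
            if best_score is None or score < best_score:
                best_score, best_pos = score, (top, left)
    return best_pos
```

Run frame after frame, the sequence of `best_pos` values is a 2D track. Many such tracks are what a solver uses to reconstruct the 3D camera motion.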

Blender's motion tracking tools are good enough to create professional camera tracks for your shots; at the end of the day, the result depends on the artist using the software. But once again, Nuke is used heavily in the industry for tracking on award-winning visual effects work.

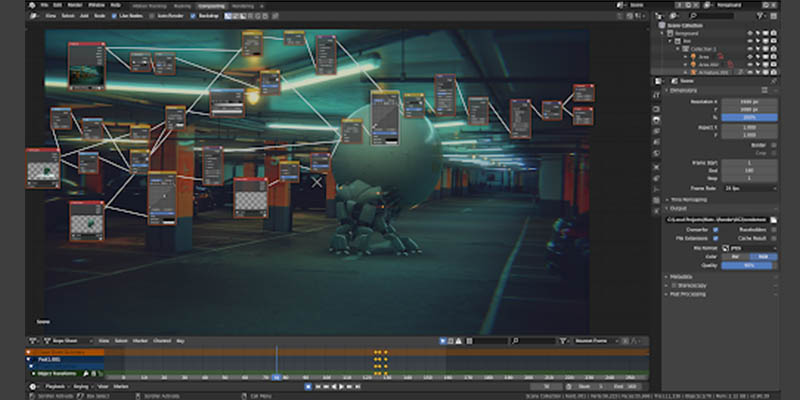

7_Compositing

Compositing nodes allow you to assemble and enhance an image (or movie). With compositing nodes, you can glue two pieces of footage together and colorize the whole sequence at once. You can enhance the colors of a single image or an entire movie clip, either statically or in a way that changes over time as the clip progresses. In this way, you use compositing nodes both to assemble video clips and to enhance them.

To process your image, you use nodes to import the image into Blender, change it, optionally merge it with other images, and finally save it.
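
The change-then-merge chain described above can be sketched as two small functions wired together like a node tree. This is a toy, not Blender's compositor. `gain`, `alpha_over`, and `composite` are hypothetical names. The sketch also assumes images are flat lists of premultiplied `(r, g, b, a)` pixels, and it leaves out the import and save steps. The merge uses the standard "over" operator for premultiplied alpha: output = foreground + background × (1 − foreground alpha).

```python
# Each image is a flat list of premultiplied (r, g, b, a) pixels with values in 0..1.

def gain(image, factor):
    """A 'change' step: scale color by `factor`, leave alpha untouched."""
    return [(r * factor, g * factor, b * factor, a) for r, g, b, a in image]


def alpha_over(background, foreground):
    """A 'merge' step: the standard 'over' operator for premultiplied alpha."""
    out = []
    for (br, bg, bb, ba), (fr, fg, fb, fa) in zip(background, foreground):
        k = 1.0 - fa  # how much of the background shows through
        out.append((fr + br * k, fg + bg * k, fb + bb * k, fa + ba * k))
    return out


def composite(background, foreground, factor):
    """Chain the steps like a tiny node tree: change the foreground, then merge it over."""
    return alpha_over(background, gain(foreground, factor))
```

With an opaque blue background, an opaque red foreground pixel darkened to half brightness stays fully in front, and a fully transparent foreground pixel lets the blue show through unchanged.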

Blender is good for compositing, but for the most part studios use Nuke because it is the industry standard and most professional artists use it to get their work done. Even Barnstorm VFX, a studio known to use Blender, still uses Nuke for compositing, I believe.

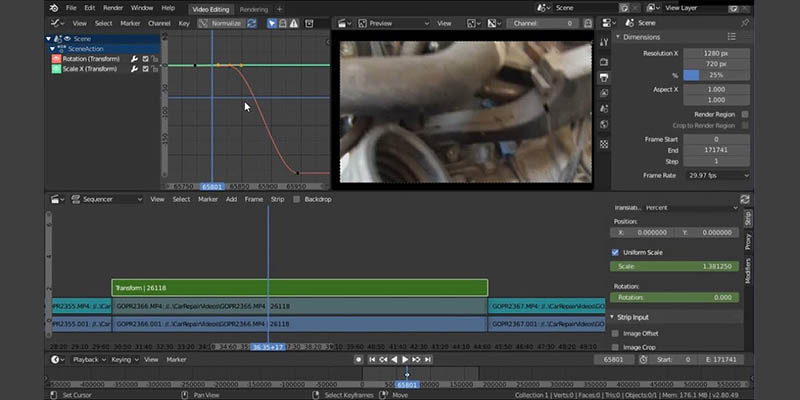

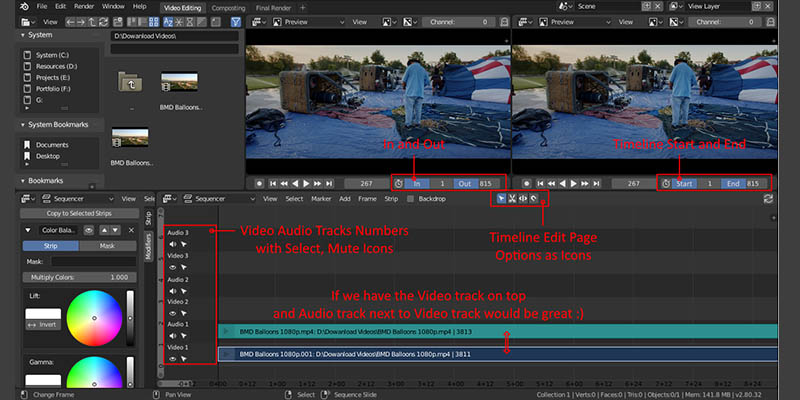

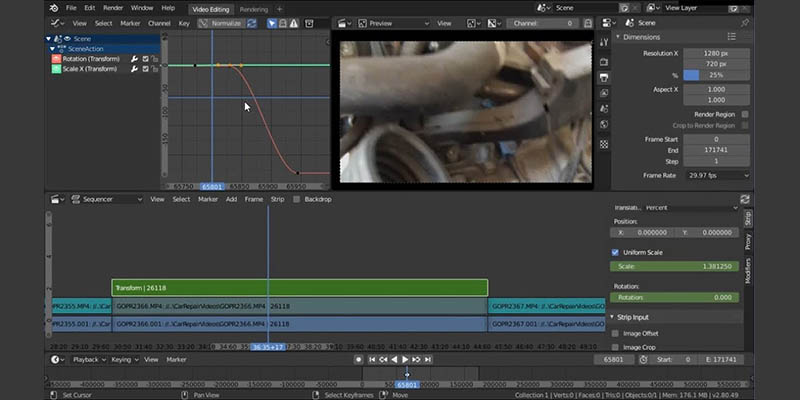

8_Video sequencer

The Sequencer within Blender is a complete video editing system that allows you to combine multiple video channels and add effects to them. You can use these effects to create powerful video edits, especially when you combine it with the animation power of Blender!

To use the VSE, you load multiple video clips and lay them end-to-end (or in some cases, overlay them), inserting fades and transitions to link the end of one clip to the beginning of another. Finally, you can add audio and synchronize the timing of the video sequence to match it.
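
Laying clips end to end with a crossfade between them comes down to simple frame arithmetic. The sketch below is a hypothetical model, not the VSE's code. `place_clips`, `crossfade_weight`, `mix`, and `sequence_length` are made-up names, and it assumes every transition uses the same overlap in frames. Each clip after the first starts `overlap` frames early, and during the overlap the incoming clip's weight ramps linearly from 0 to 1, which is roughly what a simple cross transition does.

```python
def place_clips(lengths, overlap):
    """Lay clips end to end; each later clip starts `overlap` frames early for a crossfade."""
    starts, frame = [], 0
    for length in lengths:
        starts.append(frame)
        frame += length - overlap
    return starts


def sequence_length(lengths, overlap):
    """Total frames: every transition shares `overlap` frames between two clips."""
    return sum(lengths) - overlap * (len(lengths) - 1)


def crossfade_weight(frame, start, overlap):
    """Opacity of the incoming clip: 0 at `start`, rising linearly to 1 at `start + overlap`."""
    if frame <= start:
        return 0.0
    if frame >= start + overlap:
        return 1.0
    return (frame - start) / overlap


def mix(outgoing, incoming, weight):
    """Blend one pixel value of the outgoing clip with the incoming clip."""
    return (1.0 - weight) * outgoing + weight * incoming
```

For clips of 100, 80, and 120 frames with a 10-frame crossfade, the clips start at frames 0, 90, and 160, and the sequence is 280 frames long.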

The Blender video sequencer is good for basic editing and has all the basic functions. Combined with everything else Blender can do, this makes it unique among video editors. The Blender video editor was never intended to be a powerful editor like Adobe Premiere; it was created to complement Blender's compositor and to make Blender a unique, self-contained, all-around open-source package.

As a 3D package, Blender has everything a VFX artist or VFX studio needs to get the job done, but many other tools and 3D packages do a good or even better job than Blender in some aspects of VFX production.

Recently, Blender has been gaining recognition and is used by many artists for visual effects. It is also being used for solid work by studios such as Goodbye Kansas and Barnstorm VFX, as we mentioned before, on shows such as The Man in the High Castle and Silicon Valley.

Blender has a long road ahead of it in terms of its usage in the VFX industry. It is making progress, but there are some milestones it needs to cross to get its share of professional VFX work, for reasons we talked about in a different video. Still, compared to where it was before, it is in a much better place.

If you want to learn more about VFX, there are many tutorials and courses online that can teach you the basic techniques needed to create decent work. There are also many VFX schools that teach you how to create industry-quality visual effects. Whether you go to school or become a self-taught artist is up to you, because studios don't care how you learned to create visual effects. They only care about what you can do for them and whether you can help them get things done better and faster.

Also, the software is not a big deal. There is a good chance that if you find a job, the studio will not be using Blender. If you create great work with Blender, understand the basic concepts, and are a fast, flexible learner, the studio will teach you or give you a chance to learn other software, which is something artists do all the time.